Alexa: What Can Apollo 11 Teach Us About Our Relationship with Technology?

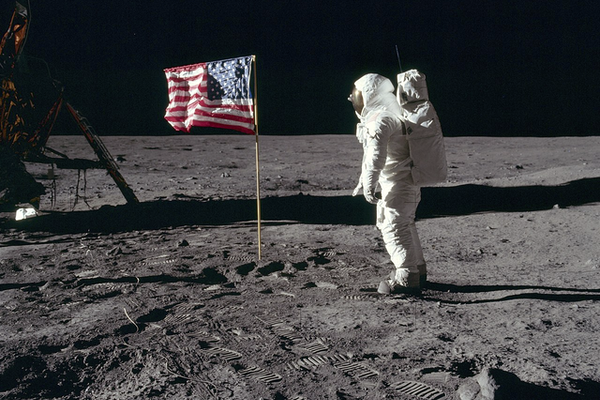

If you haven’t seen Samsung’s Apollo 11-themed television ad for its next-generation 8K TVs, it’s inspired: Families of contrasting backgrounds huddle around the tube in dark-paneled living rooms of the 1960s, eyes glistening with wonder, as they watch Neil Armstrong step onto the lunar surface. As commercials go, it’s a canny ode to American greatness past, and a stroke of advertising genius. It also reminds us that nostalgia makes for a foggy lens.

Yes, Apollo 11 was a big deal. Historian Arthur Schlesinger, Jr. rated space exploration as “the one thing for which this century will be remembered 500 years from now … ” and saw the July 1969 moon landing as the key event. Its success sealed America’s standing as the planet’s unrivaled leader in science and technology, and today’s media lookbacks, including major TV documentaries, make that case. The better of these reports highlight the turbulent times in which Apollo took flight, as American cities boiled with protests for racial and economic justice and against the Vietnam War, and concerns about the environment were on the rise. Yet, it’s still easy to gloss over the fact that, for most of the 1960s, most Americans opposed Washington spending billions of dollars on space.

What also gets overlooked is Apollo’s importance as a pivot in our national thinking about science and technology. By the late 1960s, young Americans, in particular, had come to see our rivalries with the Soviet Union—“space race” and “nuclear arms race”—as stand-ins for an ur-struggle between humankind and its machines. Baby boomers, like me, loved their incredible shrinking transistor radios, out-of-this-world four-track car stereos, and Tang, the breakfast drink of the astronauts. But we’d also seen Stanley Kubrick’s “2001: A Space Odyssey” (1968), and knew Hal the computer was not to be trusted. Harvard behavioral psychologist B.F. Skinner wryly fingered the irony of the age: “The real question is not whether machines think but whether men do.” Given the times, a healthy skepticism was in order.

In today’s digital age, we have renewed cause for pause given the way our machines have snuggled into our daily lives, a Siri here, an Alexa, smartwatch or home-security system there. The new intimacy begs a question that animated the early days of the Space Age: How do we harness technology’s promethean powers before they harness us?

C.P. Snow joined that debate in 1959, when the British physicist and novelist argued that a split between two “polar” groups, “literary intellectuals” and “physical scientists,” was crippling the West’s response to the Cold War. In “The Two Cultures,” a landmark lecture at Cambridge University, Snow said “a gulf of mutual incomprehension” separated the sides, “sometimes [involving] … hostility and dislike, but most of all lack of understanding.” Scientists in the U.K. and throughout the West had “the future in their bones,” while traditionalists were “wishing the future did not exist.” A cheerleader for science, Snow nonetheless warned that the parties had better heal their breach or risk getting steamrolled by Russia’s putative juggernaut.

Without “traditional culture,” Snow argued, the scientifically-minded lack “imaginative understanding.” Meanwhile, traditionalists, “the majority of the cleverest people,” had “about as much insight into [modern physics] as their Neolithic ancestors would have had.” Snow’s point: Only an intellectually integrated culture can work at full capacity to mesh solutions to big problems with its fundamental human values.

On this side of the pond, President Eisenhower wondered where the alliance among science, industry and government was propelling America. In his 1961 farewell address, the former five-star general warned of a military-industrial complex that could risk driving the United States toward an undemocratic technocracy. By that time, of course, the Russians had already blunted Ike’s message thanks to their record of alarming firsts, including Sputnik I, the world’s first earth-orbiting satellite, in October 1957, and the dog-manned Sputnik II the next month. The nation’s confidence rattled, Eisenhower had ramped up the space program and launched NASA in 1958.

Talk of a technology gap, including a deeply scary “missile gap,” with Russia gave the Soviets more credit than they deserved, as it turned out, but the specter of a nuclear-armed foe raining warheads on us was impossible for political leaders to ignore. Meanwhile, the boomer generation got enlisted in a cultural mobilization. Under Eisenhower, public school students learned to “duck and cover.” When John Kennedy replaced him in 1961, our teachers prepared us to confront the Soviet menace by having us run foot races on the playground or hurl softballs for distance; in the classroom, they exhorted us to buckle down on our math and science lest the enemy, which schooled its kids six days a week, clean our clocks.

In April 1961, the Soviets sprang another surprise—successfully putting the first human, cosmonaut Yuri Gagarin, into low-Earth orbit. President Kennedy countered on May 25, telling a joint session of Congress that “if we are to win the battle that is now going on around the world between freedom and tyranny …” one of the country’s goals should be “landing a man on the moon and returning him safely to the earth” by the end of the 1960s. It was a bold move, requiring a prodigious skillset we didn’t have and would have to invent.

The fact that we pulled off Apollo 11 at all is a testament to American ingenuity and pluck. Yet while the successful moon landing decided the race for space in America’s favor, it didn’t undo our subliminal angst about the tightening embrace of technology.

The mechanized carnage of World War II had seen to that. The war had killed tens of millions of people worldwide, including over 400,000 Americans, and the atomic bombs dropped on Hiroshima and Nagasaki opened humankind to a future that might dwarf such numbers. In a controversial 1957 essay, author Norman Mailer captured the sum of all fears: In modern warfare we could well “… be doomed to die as a cipher in some vast statistical operation in which our teeth would be counted, and our hair would be saved, but our death itself would be unknown, unhonored, and unremarked . . . a death by deus ex machina in a gas chamber or a radioactive city. …”

As the United States and Soviet Russia kept up their decades-long nuclear stalemate, the American mind wrestled with a sublime paradox: Only modern technology, the source of our largest fears, could protect and pacify us in the face of the dangers of modern technology.

Today, we grapple with a variation on that theme. Fears of nuclear annihilation have given way to concerns less obtrusively lethal but potentially devastating: cyber-meddling in our elections, out-and-out cyberwarfare, and nagging questions about what our digital devices, social media, and today’s information tsunami may be doing to our brains and social habits. In 2008, as the advent of the smartphone accelerated the digital age, technology writer Nicholas Carr wondered about the extent to which our digital distractions had eroded our capacity to store the accreted knowledge, what some call crystallized intelligence, that supports civilized society. The headline of Carr’s article in The Atlantic put the point bluntly: “Is Google Making Us Stupid?”

Accordingly, MIT social psychologist Sherry Turkle has argued that too much digital technology robs us of our fundamental human talent for face-to-face conversation, reduces the solitude we need for the contemplation that adds quality to what we have to say, and contributes to a hive-mindedness that can curtail true independence of thought and action.

That’s a head-twisting departure from the American tradition of the empowered individual–an idea that once inspired our intrepid moonwalkers. In his 1841 essay “Self-Reliance,” Ralph Waldo Emerson advised America to stand on its own two feet and eschew Europe as a source for ideas and intellectual custom; rather, we should establish our own culture with the individual as the sole judge of meaning, and get on with creating a new kind of nation, unshackled by the past. “There is a time in every man’s education,” wrote Emerson, “when he arrives at the conviction that envy is ignorance; that imitation is suicide; that he must take himself for better, for worse, as his portion. …”

A half-century later, in a globalizing and technologically more complex world, philosopher William James applied the Goldilocks principle to citizen-philosophers. For Americans, he argued, European-inspired “rationalism” (being guided by high-minded principle) was too airy, “empiricism” (just the facts, please) was too hard, but “pragmatism” (a mix of principles and what really works, with each individual in charge of deriving meaning) was just right. James sought to meld “the scientific loyalty to the facts” and “the old confidence in human values and the resultant spontaneity, whether of the religious or of the romantic type.”

Maybe this is what James had in mind when he reached for a description of America’s democratic inner life: “For the philosophy which is so important in each of us is not a technical matter; it is our more or less dumb sense of what life honestly and deeply means. It is only partly got from books; it is our individual way of just seeing and feeling the total push and pressure of the cosmos.”

Today, the gap between our technological capabilities and our human means of coping with them is only likely to widen. As Michiko Kakutani pointed out in her 2018 book “The Death of Truth”: “Advances in virtual reality and machine-learning systems will soon result in fabricated images and videos so convincing that they may be difficult to distinguish from the real thing … between the imitation and the real, the fake and the true.”

(If you’ve been keeping up with developments in “deepfake” technology, you know that a scary part of the future is already at the disposal of hackers foreign and domestic.)

In a sense, today’s digital dilemma is the reverse of what C.P. Snow talked about 60 years ago. Our technology innovators still have the future in their bones, to be sure; but overall, the health of our society may rest on the degree to which we don’t make the world a more convivial place for science per se, but rather deploy our humanistic traditions to make our fast-moving technology best serve and sustain the human enterprise.

At a broader level, of course, Snow was right: “To say we have to educate ourselves or perish, is a little more melodramatic than the facts warrant,” he said. “To say, we have to educate ourselves or watch a steep decline in our own lifetime, is about right.” And we can’t truly do that without prioritizing a more comprehensive partnership between the science that pushes technology ahead and the humanities that help us consider the wisdom of such advances in light of the best that has been thought and said.

And there, the Apollo program serves as a helpful metaphor. In 1968, as Apollo 8 orbited the moon in a warm-up run for Apollo 11, astronaut Bill Anders snapped “Earthrise,” the iconic photograph showing our serene blue-white sphere hanging in lonely space. Often credited with helping to launch modern environmentalism, the image underscored what human beings have riding on the preservation of their home planet. The turn in our thinking was reflected 16 months later when Earth Day was born. And ironically, perhaps, the Apollo program spun off technology—advanced computing and micro-circuitry—that helped ignite today’s disorienting digital explosion, but also produced applications for environmental science and engineering, for example, that promote the public good.

Meanwhile, our shallow grasp of digital technology presents problems that are deeper than we like to think when we think about them at all. As it stands, most Americans, this one included, have only the shakiest handle on how digital technology works its influences on us, and even the experts are of mixed minds about its protean ways.

That order of technological gap is what worried Aldous Huxley, author of the classic dystopian novel “Brave New World.” As Huxley told Mike Wallace, in a 1958 interview, “advancing technology” has a way of taking human beings by surprise. “This has happened again and again in history,” he said. “Technology … changes social conditions and suddenly people have found themselves in a situation which they didn’t foresee and doing all sorts of things they didn’t really want to do.” Unscrupulous leaders have used technology and the propaganda it makes possible in subverting the “rational side of man and appealing to his subconscious and his deeper emotions … and so, making him actually love his slavery.”

It may not be as bad as all that with us—not yet, anyway. But it does point back to our central question: Have our digital devices gotten the drop on us—or can we train ourselves to use them to our best advantage? This summer’s Apollo 11 anniversary is as timely an event as any to remind us of what’s at stake in managing digital technology’s race for who or what controls our personal and collective inner space.