AI the Latest Instance of Our Capacity for Innovation Outstripping Our Capacity for Ethics

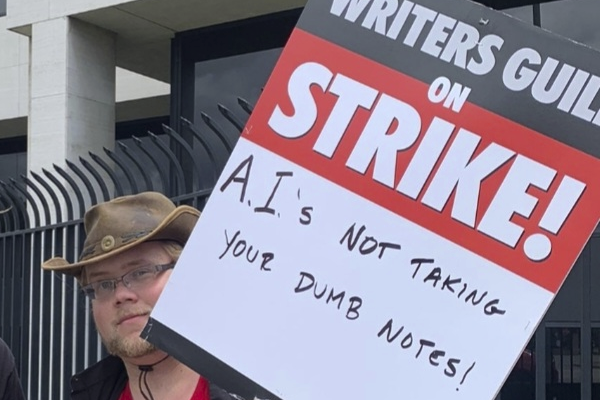

The eagerness with which movie and television studios have proposed to use artificial intelligence to write content collides with the concern of Writers Guild members for their employment security and pay in the latest episode of technological innovation running ahead of ethical deliberation.

Regarding modern technology, the psychologist Steven Pinker and the economist/environmentalist E. F. Schumacher have expressed opposite opinions. In his Enlightenment Now: The Case for Reason, Science, Humanism, and Progress (2018), the former is full of optimism--e.g., “technology is our best hope of cheating death”--but many decades earlier Schumacher stated that it was “the greatest destructive force in modern society.” And Schumacher warned, “Whatever becomes technologically possible . . . must be done. Society must adapt itself to it. The question whether or not it does any good is ruled out.”

Now, in 2023, looking over all the technological developments of the last century, I think Schumacher’s assessment was more accurate. I base this judgment on recent developments in spyware and Artificial Intelligence (AI). They have joined the ranks of nuclear weapons, our continuing climate crisis, and social media in inclining me to doubt humans’ ability to control the Frankensteinian monsters they have created. The remainder of this essay will indicate why I have made this judgment.

Before taking up the specific modern technological developments mentioned above, I will state our main failing: the structures that we have developed to manage technology are woefully inadequate. We have possessed neither the values nor the wisdom necessary to do so. Several quotes reinforce this point.

One is General Omar Bradley’s: "Ours is a world of nuclear giants and ethical infants. If we continue to develop our technology without wisdom or prudence, our servant may prove to be our executioner."

More recently, psychologist and futurist Tom Lombardo has observed that “the overriding goal” of technology has often been “to make money . . . without much consideration given to other possible values or consequences.”

Finally, the following words of Schumacher are still relevant:

“The exclusion of wisdom from economics, science, and technology was something which we could perhaps get away with for a little while, as long as we were relatively unsuccessful; but now that we have become very successful, the problem of spiritual and moral truth moves into the central position. . . . Ever-bigger machines, entailing ever-bigger concentrations of economic power and exerting ever-greater violence against the environment, do not represent progress: they are a denial of wisdom. Wisdom demands a new orientation of science and technology towards the organic, the gentle, the nonviolent, the elegant and beautiful.”

“Woefully inadequate” structures to oversee technological developments. How so? Some 200 governments are responsible for overseeing such changes in their countries. In capitalist countries, technological advances often come from individuals or corporations interested in earning profits--or sometimes from governments sponsoring research for military reasons. In countries where some form of capitalism is not dominant, what determines technological advancements? Military needs? The whims of authoritarian rulers or elites? Show me a significant country where the advancement of the common good is seriously considered when contemplating new technology.

Two main failings leap out at us. The first, which Schumacher observed a half century ago, is capitalism’s emphasis on profits rather than wisdom. The second, which is connected with a lack of wisdom, is that too many “bad guys,” leaders like Hitler, Stalin, Putin, and Trump, have had tremendous power yet poor values.

Now, however, on to the five specific technological developments mentioned above. First, nuclear weapons. From the bombings of Hiroshima and Nagasaki in 1945 until the Cuban Missile Crisis in 1962, concerns about the unleashing of a nuclear holocaust topped our list of possible technological catastrophes. In 1947, the Bulletin of the Atomic Scientists established its Doomsday Clock, “a design that warns the public about how close we are to destroying our world with dangerous technologies of our own making.” The scientists set the clock at seven minutes to midnight. “Since then the Bulletin has reset the minute hand on the Doomsday Clock 25 times,” most recently in January of this year when it was moved to 90 seconds to midnight--“the closest to global catastrophe it has ever been.” Why the move forward? “Largely (though not exclusively) because of the mounting dangers of the war in Ukraine.”

Second, our continuing climate crisis. It has been ongoing now for at least four decades. The first edition (1983) of The Twentieth Century: A Brief Global History noted that “the increased burning of fossil fuels might cause an increase in global temperatures, thereby possibly melting the polar ice caps, and flooding low-lying parts of the world.” The third edition (1990) expanded the treatment by mentioning that by 1988 scientists “concluded that the problem was much worse than they had earlier thought. . . . They claimed that the increased burning of fossil fuels like coal and petroleum was likely to cause an increase in global temperatures, possibly melting the polar ice caps, changing crop yields, and flooding low-lying parts of the world.” Since then the situation has only grown worse.

Third, the effects of social media. Four years ago I quoted historian Jill Lepore’s highly praised These Truths: A History of the United States (2018): “Hiroshima marked the beginning of a new and differently unstable political era, in which technological change wildly outpaced the human capacity for moral reckoning.” She observed that by the 1990s “targeted political messaging through emerging technologies” was contributing to “a more atomized and enraged electorate.” In addition, social media, expanded by smartphones, “provided a breeding ground for fanaticism, authoritarianism, and nihilism.”

Moreover, the Internet was “easily manipulated, not least by foreign agents. . . . Its unintended economic and political consequences were often dire.” The Internet also contributed to widening economic inequalities and a more “disconnected and distraught” world. Internet information was “uneven, unreliable,” and often unrestrained by any type of editing and fact-checking. The Internet left news-seekers “brutally constrained,” and “blogging, posting, and tweeting, artifacts of a new culture of narcissism,” became commonplace. So, too, did Internet-related companies that fed people only what they wanted to see and hear. Further, social media “exacerbated the political isolation of ordinary Americans while strengthening polarization on both the left and the right. . . . The ties to timeless truths that held the nation together, faded to ethereal invisibility.”

Similar comments came from the brilliant and humane neurologist Oliver Sacks, who shortly before his death in 2015 stated that people were developing “no immunity to the seductions of digital life” and that “what we are seeing—and bringing on ourselves—resembles a neurological catastrophe on a gigantic scale.”

Fourth, spyware. Fortunately, in the USA and many other countries independent media still exist. Such media are not faultless, but they are invaluable in bringing us truths that would otherwise be concealed. PBS is one example.

Two of the programs it produces, the PBS NewsHour and Frontline, have helped expose how insidious spyware has become. In different countries, its targets have included journalists, activists, and dissidents. According to an expert on the NewsHour,

“The use of spyware has really exploded over the last decade. One minute, you have the most up-to-date iPhone, it's clean, sitting on your bedside table, and then, the next minute, it's vacuuming up information and sending it over to some security agency on the other side of the planet.”

The Israeli company NSO Group has produced one lucrative type of spyware called Pegasus. According to Frontline, it “was designed to infect phones like iPhones or Androids. And once in the phone, it can extract and access everything from the device: the phone books, geolocation, the messages, the photos, even the encrypted messages sent by Signal or WhatsApp. It can even access the microphone or the camera of your phone remotely.” Frontline quotes one journalist, Dana Priest of The Washington Post, as stating, “This technology, it's so far ahead of government regulation and even of public understanding of what's happening out there.”

The fifth and final technological development to consider is Artificial Intelligence (AI). During the past year, the media have been agog with articles on it. Several months ago on this website I expressed doubts that any forces will be able to limit the development and sale of a product that makes money, even if it ultimately harms the common good.

More recently (this month) the PBS NewsHour again provided a public service when it conducted two interviews on AI. The first was with “Geoffrey Hinton, one of the leading voices in the field of AI,” who “announced he was quitting Google over his worries about what AI could eventually lead to if unchecked.”

Hinton told the interviewer (Geoff Bennett) that “we're entering a time of great uncertainty, where we're dealing with kinds of things we have never dealt with before.” He recognized various risks posed by AI such as misinformation, fraud, and discrimination, but there was one that he especially wanted to highlight: “the risk of super intelligent AI taking over control from people.” It was “advancing far more quickly than governments and societies can keep pace with.” While AI was leaping “forward every few months,” needed restraining legislation and international treaties could take years.

He also stated that because AI is “much smarter than us, and because it's trained from everything people ever do . . . it knows a lot about how to manipulate people,” and “it might start manipulating us into giving it more power, and we might not have a clue what's going on.” In addition, “many of the organizations developing this technology are defense departments.” And such departments “don't necessarily want to build in, be nice to people, as the first rule. Some defense departments would like to build in, kill people of a particular kind.”

Yet, despite his fears, Hinton thinks it would be a “big mistake to stop developing” AI. For “it's going to be tremendously useful in medicine. . . . You can make better nanotechnology for solar panels. You can predict floods. You can predict earthquakes. You can do tremendous good with this.”

What he would like to see is equal resources put into both developing AI and “figuring out how to keep it under control and how to minimize bad side effects of it.” He thinks “it's an area in which we can actually have international collaboration, because the machines taking over is a threat for everybody.”

The second PBS interview on AI in May was with Gary Marcus, another leading voice in the field. He also perceived many possible dangers ahead and advocated international controls.

Such efforts are admirable, but are the hopes for controls realistic? Looking back over the past century, I am more inclined to agree with General Omar Bradley--we have developed “our technology without wisdom or prudence,” and we are “ethical infants.”

In the USA, we are troubled by divisive political polarization; neither of the leading candidates for president in 2024 has majority support in the polls; and Congress and the Supreme Court are disdained by most people. Our educational systems are little concerned with stimulating thinking about wisdom or values. If not from the USA, from where else might global leadership come? From Russia? From China? From India? From Europe? From the UN? The past century offers little hope that it would spring from any of these sources.

But both Hinton and Marcus were hopeful in their PBS interviews, and just because past efforts to control technology for human betterment were generally unsuccessful does not mean we should give up. Great leaders like Abraham Lincoln, Franklin Roosevelt, and Nelson Mandela did not despair even in their nations’ darkest hours. Like them, we too must hope for--and more importantly work toward--a better future.